实验目的

熟悉如何使用Java API操作Hive

实验原理

Hive有两种操作方式,Shell和Java API。

NOTE。步骤2-9必须按照严格的顺序执行,后面的步骤基于前面的步骤的结果,如果哪一步出错且对数据库造成影响,建议执行步骤8,9后再从步骤2开始。

实验步骤

步骤1.启动Hadoop和Hive.

#start-dfs.sh

进入Hive目录后,开启Hive远程接口。执行完之后不能关闭终端。

#cd /usr/local/hive

#bin/hive --service hiveserver2

步骤2.打开eclipse,在eclipse中File->New->Other->Map/Reduce->Map/Reduce Project,新建项目HiveWithJDBC,然后新建包hiveexamples.选中HiveWithJDBC,右键Properties->Java Build Path->Libraries->Add External JARs,将/usr/local/hivejars文件夹下的所有jar包导入,然后Apply and Close。

步骤3.新建文件HiveCreateDb.java,编写Java代码通过JDBC创建数据库。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveCreateDb {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/default", "", "");

Statement stmt = con.createStatement();

stmt.execute("CREATE DATABASE userdb");

System.out.println("Database userdb created successfully.");

con.close();

}

}

步骤4.测试。

选择,右键Run as->Run on Hadoop,查看输出结果。如果输出Database userdb created successfully.则成功执行。

步骤5.新建文件HiveCreateTable.java,编写Java代码通过JDBC新建表。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveCreateTable {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/userdb", "", "");

// create statement

Statement stmt = con.createStatement();

// execute statement

stmt.execute("CREATE TABLE IF NOT EXISTS "

+" employee ( eid int, name String, "

+" salary String, destignation String)"

+" COMMENT 'Employee details'"

+" ROW FORMAT DELIMITED"

+" FIELDS TERMINATED BY '\t'"

+" LINES TERMINATED BY '\n'"

+" STORED AS TEXTFILE");

System.out.println("Table employee created.");

con.close();

}

}

重复步骤4测试。

步骤6.新建文件HiveLoadData.java,编写Java代码通过JDBC加载数据到表中。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveLoadData {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/userdb", "", "");

// create statement

Statement stmt = con.createStatement();

// execute statement

stmt.execute("LOAD DATA LOCAL INPATH '/usr/local/hive/sample.txt'" + "OVERWRITE INTO TABLE employee");

System.out.println("Load Data into employee successful");

con.close();

}

}

在开始执行之前,先要创建加载的数据文件。在/usr/local/hive下创建文件sample.txt。

# cd /usr/local/hive

# touch sample.txt

# gedit sample.txt

在打开的文件中输入以下内容,以tap键作为分隔符:

1 zhao 3300 manager

2 qian 1200 assistant

3 li 1200 assistant

重复步骤4测试。

步骤7.编写Java代码通过JDBC修改表。

新建文件HiveAlterAddColumn.java,实现添加列的功能。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveAlterAddColumn {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/userdb", "", "");

// create statement

Statement stmt = con.createStatement();

// execute statement

stmt.execute("ALTER TABLE employee ADD COLUMNS " + " (dept STRING COMMENT 'Department name')");

System.out.println("Add column successful.");

con.close();

}

}

重复步骤4测试。

新建文件HiveAlterChangeColumn.java,实现更改列名的功能。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveAlterChangeColumn {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/userdb", "", "");

// create statement

Statement stmt = con.createStatement();

// execute statement

stmt.execute("ALTER TABLE employee CHANGE name ename String");

stmt.execute("ALTER TABLE employee CHANGE salary salary Double");

System.out.println("Change column successful.");

con.close();

}

}

重复步骤4测试。

新建文件HiveAlterRenameTo.java,实现重命名表的功能。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveAlterRenameTo {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/userdb", "", "");

// create statement

Statement stmt = con.createStatement();

// execute statement

stmt.execute("ALTER TABLE employee RENAME TO empl");

System.out.println("Table Renamed Successfully");

con.close();

}

}

重复步骤4测试。

步骤8.新建文件HiveDropTable.java,编写Java代码通过JDBC删除表。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveDropTable {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/userdb", "", "");

// create statement

Statement stmt = con.createStatement();

// execute statement

stmt.execute("DROP TABLE IF EXISTS empl");

System.out.println("Drop table successful.");

con.close();

}

}

重复步骤4.

步骤9.新建文件HiveDropDb.java,编写Java代码通过JDBC删除数据库。

package hiveexamples;

import java.sql.SQLException;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.Statement;

import java.sql.DriverManager;

public class HiveDropDb {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

public static void main(String[] args) throws SQLException {

// Register driver and create driver instance

try {

Class.forName(driverName);

} catch (ClassNotFoundException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

// get connection

Connection con = DriverManager.getConnection("jdbc:hive2://localhost:10000/default", "", "");

Statement stmt = con.createStatement();

stmt.execute("DROP DATABASE userdb");

System.out.println("Drop userdb database successful.");

con.close();

}

}

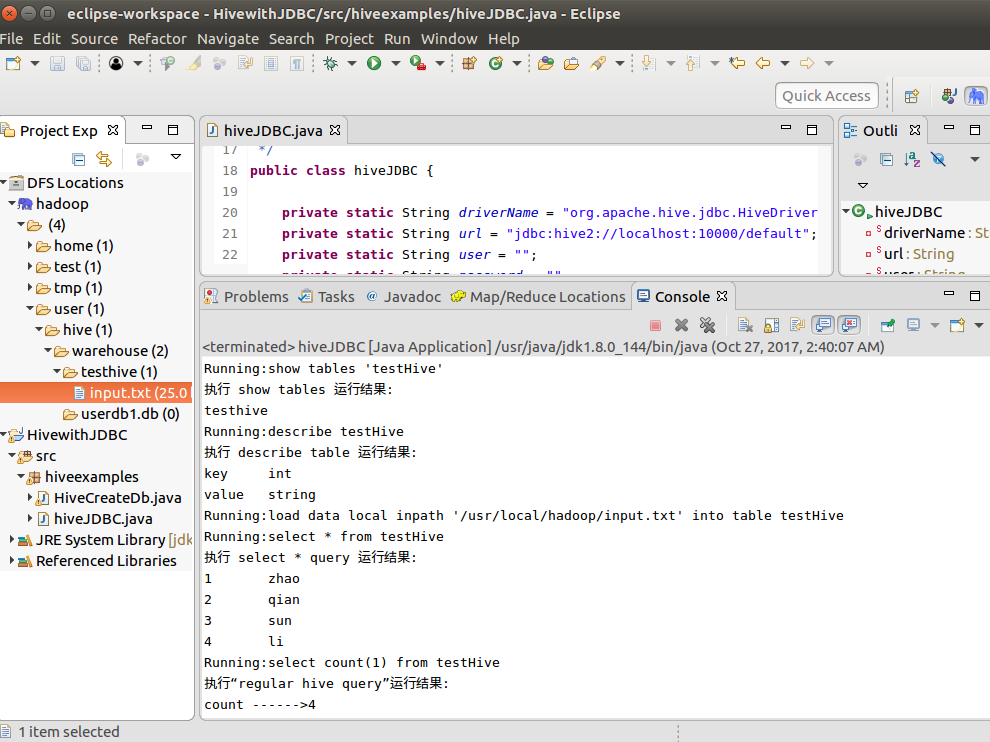

步骤10.新建文件hiveJDBC.java,该文件是总结性的测试代码。

package hiveexamples;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

import org.apache.log4j.Logger;

/**

* Hive的JavaApi

*

* 启动hive的远程服务接口命令行执行:hive --service hiveserver2

*

*

*/

public class hiveJDBC {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

private static String url = "jdbc:hive2://localhost:10000/default";

private static String user = "";

private static String password = "";

private static String sql = "";

private static ResultSet res;

private static final Logger log = Logger.getLogger(hiveJDBC.class);

public static void main(String[] args) {

Connection conn = null;

Statement stmt = null;

try {

conn = getConn();

stmt = conn.createStatement();

// 第一步:存在就先删除

String tableName = dropTable(stmt);

// 第二步:不存在就创建

createTable(stmt, tableName);

// 第三步:查看创建的表

showTables(stmt, tableName);

// 执行describe table操作

describeTables(stmt, tableName);

// 执行load data into table操作

loadData(stmt, tableName);

// 执行 select * query 操作

selectData(stmt, tableName);

// 执行 regular hive query 统计操作

countData(stmt, tableName);

} catch (ClassNotFoundException e) {

e.printStackTrace();

log.error(driverName + " not found!", e);

System.exit(1);

} catch (SQLException e) {

e.printStackTrace();

log.error("Connection error!", e);

System.exit(1);

} finally {

try {

if (conn != null) {

conn.close();

conn = null;

}

} catch (SQLException e) {

e.printStackTrace();

}

}

}

private static void countData(Statement stmt, String tableName)

throws SQLException {

sql = "select count(1) from " + tableName;

System.out.println("Running:" + sql);

res = stmt.executeQuery(sql);

System.out.println("执行“regular hive query”运行结果:");

while (res.next()) {

System.out.println("count ------>" + res.getString(1));

}

}

private static void selectData(Statement stmt, String tableName)

throws SQLException {

sql = "select * from " + tableName;

System.out.println("Running:" + sql);

res = stmt.executeQuery(sql);

System.out.println("执行 select * query 运行结果:");

while (res.next()) {

System.out.println(res.getInt(1) + "\t" + res.getString(2));

}

}

private static void loadData(Statement stmt, String tableName)

throws SQLException {

String filepath = "/usr/local/hadoop/input.txt";

sql = "load data local inpath '" + filepath + "' into table "

+ tableName;

System.out.println("Running:" + sql);

stmt.execute(sql);

}

private static void describeTables(Statement stmt, String tableName)

throws SQLException {

sql = "describe " + tableName;

System.out.println("Running:" + sql);

res = stmt.executeQuery(sql);

System.out.println("执行 describe table 运行结果:");

while (res.next()) {

System.out.println(res.getString(1) + "\t" + res.getString(2));

}

}

private static void showTables(Statement stmt, String tableName)

throws SQLException {

sql = "show tables '" + tableName + "'";

System.out.println("Running:" + sql);

res = stmt.executeQuery(sql);

System.out.println("执行 show tables 运行结果:");

if (res.next()) {

System.out.println(res.getString(1));

}

}

private static void createTable(Statement stmt, String tableName)

throws SQLException {

sql = "create table "

+ tableName

+ " (key int, value string) row format delimited fields terminated by '\t'";

stmt.execute(sql);

}

private static String dropTable(Statement stmt) throws SQLException {

// 创建的表名

String tableName = "testHive";

sql = "drop table " + tableName;

stmt.execute(sql);

return tableName;

}

private static Connection getConn() throws ClassNotFoundException,

SQLException {

Class.forName(driverName);

Connection conn = DriverManager.getConnection(url, user, password);

return conn;

}

}

步骤11.重复步骤4,查看结果。